Taking action on climate is about a lot more than our energy economy. Climate disruption is the leading threat to our built environment, an accelerant of armed conflict, and a leading cause of mass migration. Its effects intensify and prolong storms, droughts, wildfires, and floods — resulting in the US spending as much on disaster management in 2017 as in the three decades from 1980 to 2010.

Out-of-control wildfire approaching Estreito da Calheta, Portugal, September 2017. Photograph: Michael Held

Fiscal conservatism and national security require a smart, focused, effective solution that protects our economy and our values.

Political division between the major parties in Washington has left the burden of achieving that solution largely on Democratic administrations using regulatory measures that — for all their smart design and ambition — cannot be transformational enough to carry us through to a livable future.

Conservatives say the nation needs an insurance policy. Business leaders want to future-proof their operations and investments. Young people are demanding intervention on the scale of the Allies’ efforts to rebuild Europe after World War II.

The International Monetary Fund, whose mission is to ensure that national dysfunction doesn’t undermine the solvency of public budgets and lead to failed states, warns that nations heavily dependent on publicly subsidized fossil fuels are endangering their future solvency by investing in ways that destroy future economic resilience. Building resilience requires diversification and innovation on a massive scale.

The rapid expansion of green bonds is making clear the deep need for clean-economy holdings among major banks and institutional investors. Climate-smart finance, still a new concept, is expected to become the standard for both public and private-sector actors at all levels within 10 to 20 years.

Republican former Secretaries of the Treasury James Baker and George Shultz have called for a carbon dividends strategy, because:

- it avoids new regulation,

- it abides by conservative principles of market efficiency, and

- it leverages improvements to the Main Street economy to ensure a future of real energy freedom.

Main Street economies suffer when too much of the money in circulation flows to finance, without clear incentives to lend to small businesses. The steadily rising monthly carbon dividend makes sure more of the money in circulation flows through small businesses, locking in that incentive and making the whole economy more efficient at creating wealth for the average household. Photograph: Joseph Robertson

Unpaid-for pollution and climate disruption limit our personal freedom and then, by adding cost and risk to the whole economy, undermine our collective ability to defend our freedom and secure future prosperity. Even with record oil and gas production, the US still depends heavily on foreign regimes hostile to democracy that manipulate supply and undermine the efficiency of our everyday economy.

Energy freedom means reliable, widely available, low-cost clean energy that meets the demand created by expanded Main Street economic activity.

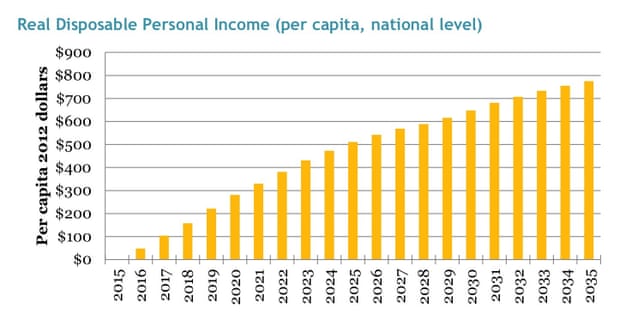

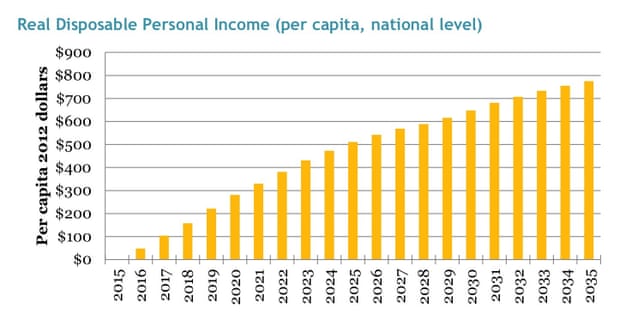

A study by Regional Economic Models, Inc., which modeled the interacting economy-wide impacts of monthly household carbon dividends, found real disposable personal income rising for at least 20 years after the first dividends arrive in the mail. Details at https://ift.tt/2lUxIs1 Illustration: Regional Economic Models, Inc.

Ask any small business owner whether they would rather have higher or lower hidden business costs built into everything they buy from their suppliers. Of course, they would prefer lower hidden costs and risks, and customers with more money in their pockets.

That is how carbon dividends work.

- A simple upstream fee, paid at the source by any entity that sells polluting fuels carrying such hidden costs and risks. This is administratively simple, light-touch, economy-wide, and fair to all.

- 100% of the revenues from that fee are returned to households in equal shares, every month. This ensures the Main Street economy keeps humming along.

- Because both the fee and the dividend steadily rise, pollution-dependent businesses — and the banks that finance them — can see the optimal rate of innovation and diversification to liberate themselves from the subsidized pollution trap. The whole economy becomes more competitive and more efficient at delivering real-world value to Main Street.

- To ensure energy-intensive, trade-exposed industries are not drawn away by other nations keeping carbon fuels artificially cheap, a simple border carbon adjustment ensures a level playing field, while adding negotiating power to US diplomatic efforts, on every issue everywhere.
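To make the arithmetic behind the bullets above concrete, here is a minimal Python sketch. Every name and number in it (`FeeSchedule`, `monthly_dividend`, `border_adjustment`, the $15 starting fee, the $10 yearly step, the emissions and household counts) is a hypothetical assumption chosen for illustration, not a figure from the Baker-Shultz proposal or the REMI study. It also holds emissions fixed, whereas a rising fee would be expected to push them down.

```python
from dataclasses import dataclass


@dataclass
class FeeSchedule:
    """Hypothetical upstream fee that rises by a fixed, predictable step each year."""
    start_fee: float        # USD per tonne CO2 in year 0 (assumed value)
    annual_increase: float  # USD per tonne added each year (assumed value)

    def fee_in_year(self, year: int) -> float:
        # A linear, pre-announced schedule lets businesses plan ahead.
        return self.start_fee + self.annual_increase * year


def monthly_dividend(annual_emissions_t: float, fee_per_t: float, households: int) -> float:
    """Return 100% of a year's fee revenue to households in equal monthly shares."""
    annual_revenue = annual_emissions_t * fee_per_t
    return annual_revenue / households / 12


def border_adjustment(embodied_t: float, domestic_fee: float, foreign_fee: float) -> float:
    """Charge an import the gap between the domestic fee and any carbon price
    already paid abroad, never going below zero (a simplifying assumption)."""
    return embodied_t * max(domestic_fee - foreign_fee, 0.0)


if __name__ == "__main__":
    schedule = FeeSchedule(start_fee=15.0, annual_increase=10.0)
    fee = schedule.fee_in_year(0)
    # Hypothetical: 4.8 billion tonnes covered, 120 million households.
    print(monthly_dividend(4_800_000_000, fee, 120_000_000))  # 50.0 USD per household per month
    print(border_adjustment(2.0, fee, 5.0))  # 20.0 USD on an import embodying 2 tonnes
```

Because the dividend is the same for every household while fee costs scale with fuel use, households that use less carbon-intensive energy than average come out ahead in this simplified model.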

from Skeptical Science https://ift.tt/2zdfBat