In the few weeks since it was introduced as a non-binding resolution before the U.S. Senate and House of Representatives, the Green New Deal (GND) Resolution has generated more discussion and coverage of climate change – positive and negative – among, by, and aimed at policymakers than we’ve seen in more than a decade.

The nonbinding initiative introduced by Rep. Alexandria Ocasio-Cortez (D-NY) and Sen. Edward Markey (D-MA) proposes embarking on a 10-year mobilization aimed at achieving net-zero greenhouse gas emissions in the United States. The mobilization would entail a massive overhaul of American electricity, transportation, and building infrastructure to replace fossil fuels and improve energy efficiency, leading some to call it unrealistic, idealistic, politically impossible, and “socialistic.”

Analysis

Proponents of GND portray it as an early focus for meaningful climate policy discussion if political winds lead to changes in 2020 for the presidency and the Senate majority. They say the GND is the first proposal to grasp the scale and magnitude of the risks posed by the warming climate. And while begrudgingly accepting the insurmountable odds against full enactment before 2021 at the earliest, they see it as a worthwhile and long-overdue discussion piece.

Many commentators and policy analysts argue that the changes it calls for would be too expensive, radical, and disruptive. Others argue that anyone who doesn’t support this sort of emergency transition away from fossil fuels is in denial about the magnitude of the climate problem. Many are confused about the Resolution’s vague contents, in part because Ocasio-Cortez’s office also released an inaccurate fact sheet that subsequently had to be retracted. That document gave those inclined to dampen enthusiasm for the GND early and easy targets.

A nonbinding ‘sense of the Senate’ resolution

Critically, GND must be recognized as a non-binding “sense of the Senate/House” resolution. It is not intended as proposed legislation, and certainly not as a specific climate policy bill. Think of it more as a framework on which to build actual climate legislation. In effect, a “yes” vote in either the Senate or the House would signify acceptance of climate change as a sufficiently urgent threat to merit full consideration of an expansive 10-year mobilization to transition away from polluting fossil fuels. In addition, the resolution isn’t intended to be exclusionary: at least five House co-sponsors are also co-sponsoring a revenue-neutral carbon tax bill (the Energy Innovation and Carbon Dividend Act).

Whether and exactly when the GND resolution will come to a full vote remains unclear, but Senate Majority Leader Mitch McConnell (R-KY) has said he will bring it to a vote in the Senate. It would likely pose an uncomfortable vote for those potentially vulnerable Democrats up for re-election in 2020 in “red” or coal-dependent states.

For Americans and their elected representatives, the decision whether to support this fundamentally transformative and sweeping resolution – provisions of which go well beyond those directly applying to climate change to include economic and social equity issues – hinges on four key factors. For politicians, the political considerations may weigh most heavily, but let’s deal with those last.

Science and physical considerations

The first consideration is the easiest from a scientific perspective: How much more global warming can occur before its net physical impacts become unacceptably negative?

The science community’s answer is that we’ve already passed that point, and that it’s time to act now. Regions around the world are already experiencing increasingly severe extreme weather events such as heat waves, droughts, wildfires, and floods.

A paper recently published in Nature Communications found that Atlantic hurricanes are undergoing more rapid intensification as a result of global warming. Sea-level rise poses a threat to coastal communities and island nations. The one-two punch of warming and acidifying oceans is killing coral reefs, which are home to 25 percent of marine life. The recent IPCC Special Report found that “Coral reefs would decline by 70-90 percent with global warming of 2.7°F (1.5°C), and more than 99 percent would be lost with 3.6°F (2°C).” Species are dying out at a rate similar to past mass extinction events, with a new study finding that 40 percent of insect species are threatened with extinction. (For a breakdown of climate impacts in each region of the country, the Fourth National Climate Assessment is a wonderful resource.)
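The IPCC figures pair 1.5°C with 2.7°F and 2°C with 3.6°F, which can look odd to readers used to converting thermometer readings. The minimal Python sketch below shows the arithmetic: an amount of warming (a temperature change) scales by 9/5 only, without the +32 offset used for absolute temperatures. The function name `warming_c_to_f` is purely illustrative.

```python
def warming_c_to_f(delta_c: float) -> float:
    """Convert an amount of warming (a temperature *change*) from °C to °F.

    Only the 9/5 scale factor applies to differences; the +32 offset is
    used for absolute readings, not for changes in temperature.
    """
    return delta_c * 9 / 5


# The two warming levels quoted from the IPCC Special Report.
for delta_c in (1.5, 2.0):
    print(f"{delta_c}°C of warming = {warming_c_to_f(delta_c):.1f}°F")
```

Running it prints 2.7°F for 1.5°C and 3.6°F for 2°C, matching the figures in the quote.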

In short, if physical impacts were the only consideration, we would want to halt (and even reverse) climate change as quickly as possible. Of course, that’s not the case, which brings us to the second category.

Economic considerations

In a capitalist society, economic considerations are of course important to Americans. The projects involved in the GND mobilization would cost trillions of dollars, but curbing climate change could also prevent trillions of dollars in damages globally.

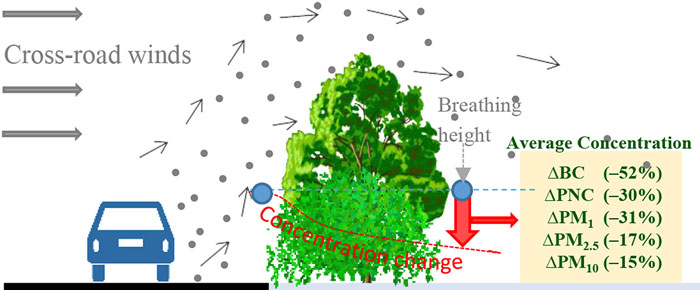

The GND includes a proposed jobs guarantee, on the premise that the needed infrastructure overhauls would create enormous employment opportunities. The transition away from fossil fuels would also yield economic benefits in the form of avoided costs: reducing other pollutants would mean cleaner air and water and healthier Americans. A 2017 study headed by David Coady at the International Monetary Fund estimated that fossil fuel air pollution costs the United States $206 billion per year and that, when all subsidies and costs are added, the country is spending $700 billion annually on fossil fuels – more than $2,000 per person every year.
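As a rough check on the “more than $2,000 per person” figure, the sketch below divides the $700 billion annual total by the U.S. population. The population value of about 325 million (roughly the 2017 level) is an assumption of this sketch, not a number taken from the IMF study or from this article.

```python
# Rough per-capita check of the $700 billion annual figure cited above.
ANNUAL_SPENDING_USD = 700e9  # $700 billion per year, as cited from the IMF study
US_POPULATION = 325e6        # ASSUMPTION: ~325 million people, not from the source

per_person = ANNUAL_SPENDING_USD / US_POPULATION
print(f"${per_person:,.0f} per person per year")
```

Under that population assumption the result is about $2,154 per person per year, consistent with the article’s “more than $2,000.”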

GND opponents counter that the economic costs of a vast 10-year mobilization would exceed the resulting economic benefits, but Stanford University researcher Jonathan Koomey suggests that we can’t predict how fast a transition to a clean energy economy would be optimal. Given the difficulty of predicting technological breakthroughs, and given that pathways with very different energy mixes can end up with similar costs, it’s impossible to say whether the U.S. would be wealthier – from a strictly financial perspective – in a world that’s 2 or 3 or 4 degrees hotter.

People, and perhaps politicians in particular, tend to focus on these economic considerations (and often only on costs, ignoring the resulting benefits) to the exclusion of the others. But these considerations are very difficult to quantify, and they involve numerous components – capital costs, avoided climate damages, increased employment, improved public health, and more.

In addition, some factors simply cannot be quantified in dollars. As Tufts economist Frank Ackerman has noted, “There are numerous problems with CBA [cost-benefit analyses], such as the need to (literally) make up monetary prices for priceless values of human life, health and the natural environment. In practice, CBA often trivializes the value of life and nature.”

Ethical and moral considerations

Consider a family that loses its home in a climate-amplified wildfire or hurricane. Quantifying the cost of replacing the home and belongings is doable, but how do we account for the psychological trauma of the event, let alone for lives lost?

In purely economic terms, rebuilding the home will create investment and jobs, which would dampen the disaster’s impact on the national economy. But as a society, we would consider these traumatizing losses quite harmful and well worth preventing for ethical reasons. For example, one study found that nearly half of low-income parents affected by Hurricane Katrina experienced post-traumatic stress disorder.

Researchers have found that people who have traditionally been underserved and who have the fewest resources are the least able to adapt to climate change impacts. A team led by James Samson reported in a 2011 paper that, internationally, the populations contributing the least to climate change tend to be the most vulnerable to its impacts. The higher temperatures resulting from global warming do the most harm in regions that are already hot, such as developing countries in Africa and Central America, which also have the fewest resources to adapt. While the United States is responsible for more historical carbon pollution than any other country on Earth, it is geographically and economically insulated from the worst projected impacts of climate change that these poorer, less culpable countries will face.

This reality makes it harder for some to justify an expensive green mobilization based solely on this country’s direct economic interests, particularly over short time horizons. However, ignoring the harm our carbon pollution does to the most vulnerable people – both within and beyond our borders – raises daunting ethical and moral questions.

Moreover, as a line often attributed to Ralph Waldo Emerson puts it, “To leave the world a bit better … that is to have succeeded.” Leaving behind a less hospitable world for our children and grandchildren might be considered our generation’s worst moral failure of all.

Political considerations

Finally, given that climate policies must be implemented by policymakers, the question of what’s politically feasible is critical, and for some perhaps dispositive.

As of mid-February, the GND Resolution had been co-sponsored by 68 members of the House and 12 members of the Senate, all of them Democrats. The co-sponsors include many of the high-profile hopefuls for the 2020 Democratic presidential nomination, though there are some exceptions, and some party leaders are still wavering.

On the other side of the political aisle, such a large government-run mobilization is generally incompatible with traditional Republican Party orthodoxy, let alone with the views of the President, the party’s titular head. Unless the Democratic Party in 2020 retains its current House majority and gains control of both the presidency and a clear Senate majority, passing legislation will require bipartisanship. That’s particularly true in the Senate, where most legislation requires 60 votes to overcome a filibuster; in both chambers, overriding a presidential veto takes a two-thirds vote.

The current Republican-controlled Senate (in session until January 2021) certainly won’t consider actual legislation involving a vast government climate mobilization, although smaller individual infrastructure components might be considered. There may be growing support for a bipartisan carbon tax bill – one potential component of a GND – but that and any other significant climate legislation also will likely depend on the winners of the White House and of House and Senate majorities in 2021.

from Skeptical Science https://ift.tt/2BX1d50
